Amazon Simple Storage Service (S3) is a cornerstone of cloud storage solutions, offering scalability, durability, and unmatched reliability. While S3 is renowned for its straightforward usage, hidden costs can quickly accumulate if you’re not vigilant. One area where these costs can hide is S3 multipart uploads.

In this blog, we’ll delve into the world of S3 multipart uploads, uncovering the potential pitfalls and offering strategies to save you from these hidden expenses.

Understanding S3 Multipart Uploads

S3 multipart uploads are a feature designed to simplify the process of uploading large objects to S3 by breaking them into smaller pieces, known as “parts.” These parts can be uploaded in parallel, enhancing performance and fault tolerance. Once all the parts are uploaded, S3 assembles them into the complete object.

While this approach offers clear advantages, it also presents opportunities for cost inefficiencies to creep in.

The hidden cost culprits include:

- Part Size Selection: Selecting an inappropriate part size can lead to unnecessary request and data transfer costs. Smaller parts increase overhead because more requests are needed, while larger parts mean that more data must be re-sent whenever a single part fails and is retried.

- Incomplete Multipart Uploads: Leaving multipart uploads incomplete can lead to unexpected storage charges. Abandoned parts occupy storage space until the upload is completed or aborted.

- Excessive Part Count: Multipart uploads involve metadata and management operations for each part. An excessive number of parts can inflate operational costs, especially when dealing with a high volume of uploads.

- Inefficient Error Handling: Incorrectly handling errors during multipart uploads can result in additional retries, which means more API requests and potential overage costs.
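To make the part size and part count trade-off concrete, here is a minimal sketch that picks a part size within the documented S3 limits (parts of 5 MiB to 5 GiB, except that the last part may be smaller, and at most 10,000 parts per upload). The `plan_parts` helper and its 8 MiB default are assumptions made for illustration, not part of any AWS SDK.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3

# Documented S3 multipart upload limits.
MIN_PART_SIZE = 5 * MIB   # every part except the last must be at least this large
MAX_PART_SIZE = 5 * GIB   # largest allowed single part
MAX_PARTS = 10_000        # maximum number of parts in one upload


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, avoiding floating-point error."""
    return -(-a // b)


def plan_parts(object_size: int, preferred_part_size: int = 8 * MIB) -> tuple[int, int]:
    """Return (part_size, part_count) in bytes/parts for an object of object_size bytes."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    # Clamp the preferred size into the range S3 accepts.
    part_size = min(max(preferred_part_size, MIN_PART_SIZE), MAX_PART_SIZE)
    # Grow the part size if the object would otherwise need more than MAX_PARTS parts.
    part_size = max(part_size, ceil_div(object_size, MAX_PARTS))
    if part_size > MAX_PART_SIZE:
        raise ValueError("object is too large for a single multipart upload")
    return part_size, ceil_div(object_size, part_size)
```

With these assumptions, a 100 MiB object is split into 13 parts of 8 MiB, while a 1 TiB object forces the part size up to roughly 105 MiB so that the upload stays within 10,000 parts.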

One frequently encountered issue is incomplete multipart uploads being abandoned and left behind in a bucket. These abandoned uploads do not appear in the bucket’s object listing, so they stay hidden and cannot be deleted like ordinary objects through the AWS Console.

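Even listing the bucket with the command-line interface (CLI) via `aws s3 ls` fails to reveal these unfinished uploads. They only show up through the multipart-specific API, for example `aws s3api list-multipart-uploads`.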

Customer Case Study

During our exploration of cost optimisation strategies, we came across a surprising discovery for one of our valued customers – a whopping 4 TB of hidden multipart uploads. Astonishingly, these uploads had remained unnoticed for the past five years, silently incurring costs. It’s incredible how something hidden from plain sight could add up to a substantial expense of approximately $100 per month, culminating in a staggering total of around $6,000 for these neglected uploads.
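The figures above can be sanity-checked with a few lines of arithmetic. This is a rough sketch that assumes the S3 Standard list price of $0.023 per GB-month (an assumption; prices vary by Region, tier, and storage class) and that the full 4 TB was present for the whole five years.

```python
# Assumed S3 Standard list price in USD per GB-month; check current pricing for your Region.
ASSUMED_PRICE_PER_GB_MONTH = 0.023


def monthly_storage_cost(size_tb: float, price_per_gb_month: float = ASSUMED_PRICE_PER_GB_MONTH) -> float:
    """Monthly cost in USD of storing size_tb terabytes (1 TB taken as 1024 GB)."""
    return size_tb * 1024 * price_per_gb_month


monthly = monthly_storage_cost(4)
five_years = monthly * 12 * 5
print(f"per month: ${monthly:,.2f}, over five years: ${five_years:,.2f}")
```

Under these assumptions the result is roughly $94 per month and about $5,650 over five years, in line with the approximate figures quoted above.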

Uncovering Ongoing Multipart Uploads

Check whether any of the buckets in your AWS account contain incomplete multipart uploads.

Using Code

Follow the steps below to check whether any of your S3 buckets contain incomplete multipart uploads:

- Set AWS credentials using this approach

- Save the script below as `check-multipart-upload.sh`

- Make the script executable: `chmod +x check-multipart-upload.sh`

- Execute `./check-multipart-upload.sh`

Please be aware that the script below will generate a file for each S3 bucket if there are ongoing multipart uploads associated with them.

bucket_list=$(aws s3api list-buckets) |
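For readers who prefer Python, the same kind of check can be sketched with boto3. This is not the shell script referred to above. It is a minimal illustration that assumes a boto3 S3 client is passed in, uses the `list_buckets` call and the `list_multipart_uploads` paginator, and yields records instead of writing one file per bucket. The function name `iter_incomplete_uploads` is hypothetical.

```python
from typing import Any, Iterator


def iter_incomplete_uploads(s3: Any) -> Iterator[dict]:
    """Yield one record per in-progress multipart upload in every bucket the client can list.

    `s3` is expected to behave like a boto3 S3 client.
    """
    for bucket in s3.list_buckets().get("Buckets", []):
        name = bucket["Name"]
        # The paginator follows continuation markers for buckets with many uploads.
        paginator = s3.get_paginator("list_multipart_uploads")
        for page in paginator.paginate(Bucket=name):
            # "Uploads" is absent from the response when a bucket has none.
            for upload in page.get("Uploads", []):
                yield {
                    "bucket": name,
                    "key": upload["Key"],
                    "upload_id": upload["UploadId"],
                    "initiated": upload["Initiated"],
                }
```

With credentials configured, calling `iter_incomplete_uploads(boto3.client("s3"))` and printing each record gives a quick inventory of what is waiting to be cleaned up.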

Using Storage Lens

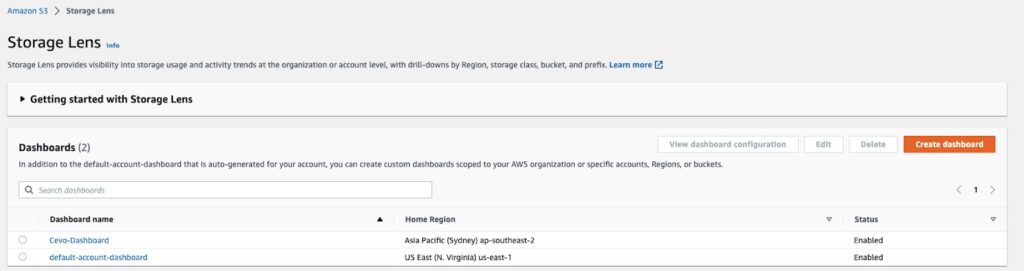

Amazon S3 Storage Lens provides visibility into storage usage and activity trends at the organisation or account level, with drill-downs by Region, storage class, bucket, and prefix.

We will use S3 Storage Lens to discover our AWS accounts and S3 buckets that contain multipart uploads.

We will also be able to see how much data exists as a result of these incomplete multipart uploads. For information on how to set up S3 Storage Lens, refer to the AWS documentation. If you are setting up a new S3 Storage Lens dashboard or accessing your default dashboard for the first time, be mindful that it can take up to 48 hours to generate your initial metrics.

S3 Storage Lens provides four Cost Efficiency metrics for analysing incomplete multipart uploads in your S3 buckets. These metrics are free of charge and automatically configured for all S3 Storage Lens dashboards.

- Incomplete Multipart Upload Storage Bytes – The total bytes in scope with incomplete multipart uploads

- % Incomplete MPU Bytes – The percentage of bytes in scope that are results of incomplete multipart uploads

- Incomplete Multipart Upload Object Count – The number of objects in scope that are incomplete multipart uploads

- % Incomplete MPU Objects – The percentage of objects in scope that are incomplete multipart uploads

Create a dashboard for your organisation’s Region.

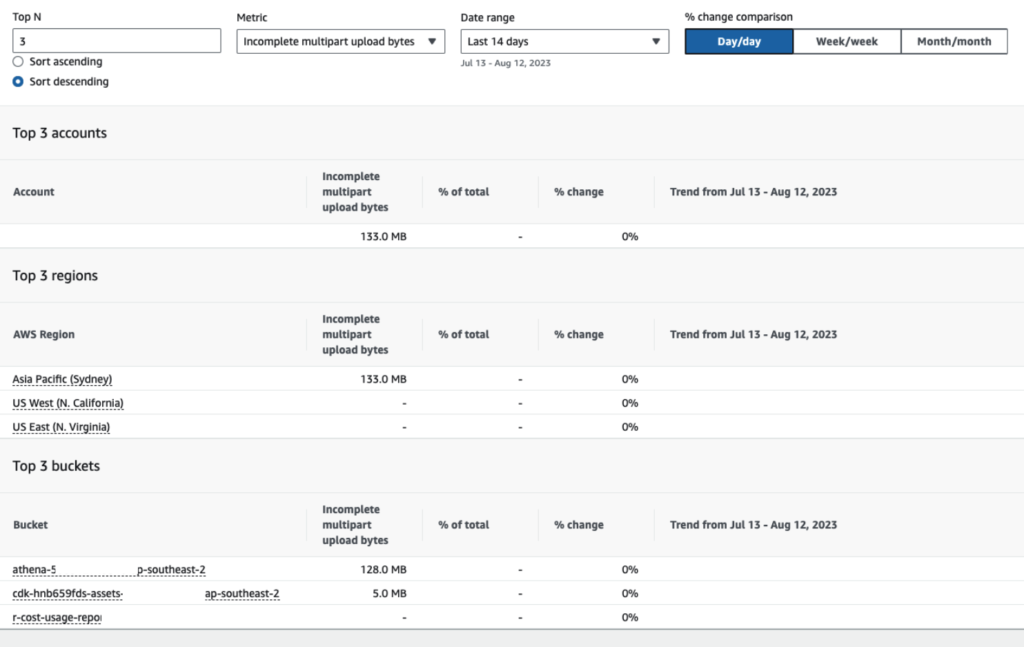

Having accessed the dashboard, we proceed to scroll down to the section labelled “Top N Overview.”

For the Metric selection, we opt for “Incomplete multipart upload bytes.” This metric provides us with insights into the total number of bytes falling under the scope of incomplete multipart uploads.

This enables us to examine the AWS accounts (if the dashboard is created at the organisation level), Regions, and buckets where incomplete multipart uploads are present. Furthermore, we gain visibility into the volume of data that incurs charges. While the initial findings regarding incomplete multipart upload bytes might not immediately appear significant, it’s crucial to remember that charges persist until multipart uploads are either completed or aborted. Over time, these charges can accumulate substantially.

Resolution

There are multiple effective strategies to manage S3 multipart uploads:

For existing buckets:

- Implement a lifecycle policy: You have two options here:

  - Using code: Develop a tailored solution utilising code.

  - AWS Console: Leverage the AWS console to set up the lifecycle policy.

- Execute one-time cleanup: A bash script can be employed to perform a comprehensive one-time cleanup.

For new buckets:

- Utilise CloudTrail and Lambda: Create a lifecycle rule for newly established buckets by harnessing the capabilities of CloudTrail and Lambda functions.

These strategies provide distinct paths to tackle the issue at hand, catering to both existing and new buckets.

Add Lifecycle Policy

Add an S3 lifecycle policy to all the buckets in the AWS account. More details can be found in the AWS documentation.

Using Code

Set AWS credentials using this approach.

import boto3 |

- Save the script as `create-lc-rule.py`

- Execute `python3 create-lc-rule.py`
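As a rough illustration of what such a script could contain, the sketch below adds an `AbortIncompleteMultipartUpload` rule to a bucket through a boto3 S3 client. It is an assumption-laden example rather than the original `create-lc-rule.py`. The function names are hypothetical, and the rule ID simply reuses the “MPU Retention” name from the console walkthrough. Because `put_bucket_lifecycle_configuration` replaces the whole configuration, the sketch reads the existing rules first and appends to them.

```python
from typing import Any

RULE_ID = "MPU Retention"  # illustrative ID, matching the console example in this post


def build_abort_rule(days: int = 7) -> dict:
    """Lifecycle rule that aborts multipart uploads `days` days after initiation."""
    return {
        "ID": RULE_ID,
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix: apply to the whole bucket
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
    }


def add_abort_rule(s3: Any, bucket: str, days: int = 7) -> bool:
    """Add the rule to `bucket` unless one with the same ID exists. Returns True if updated."""
    try:
        rules = s3.get_bucket_lifecycle_configuration(Bucket=bucket).get("Rules", [])
    except Exception as err:  # botocore raises ClientError; inspect its error code
        code = getattr(err, "response", {}).get("Error", {}).get("Code")
        if code != "NoSuchLifecycleConfiguration":
            raise
        rules = []  # the bucket has no lifecycle configuration yet
    if any(rule.get("ID") == RULE_ID for rule in rules):
        return False
    # The put call replaces the entire configuration, so existing rules are sent back too.
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={"Rules": rules + [build_abort_rule(days)]},
    )
    return True
```

To cover every bucket in the account, one could loop over `s3.list_buckets()["Buckets"]` and call `add_abort_rule(s3, bucket["Name"])` for each, where `s3 = boto3.client("s3")`. It is worth trying this on a test bucket first, particularly if some buckets already carry older lifecycle rules.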

Using AWS console

While this approach works well for a single bucket, it may not be a scalable solution.

Steps:

- Go to the bucket in the S3 console

- Click on the Management tab, then Create lifecycle rule

- Enter details as below:

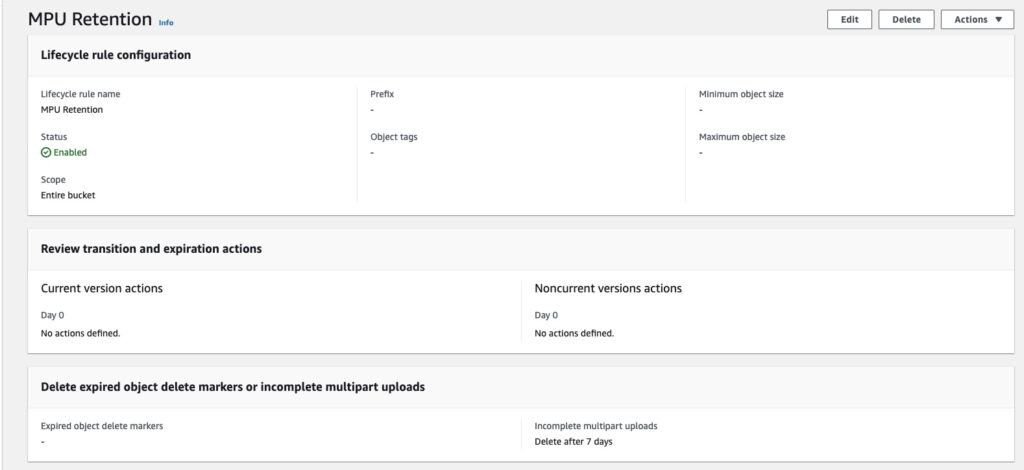

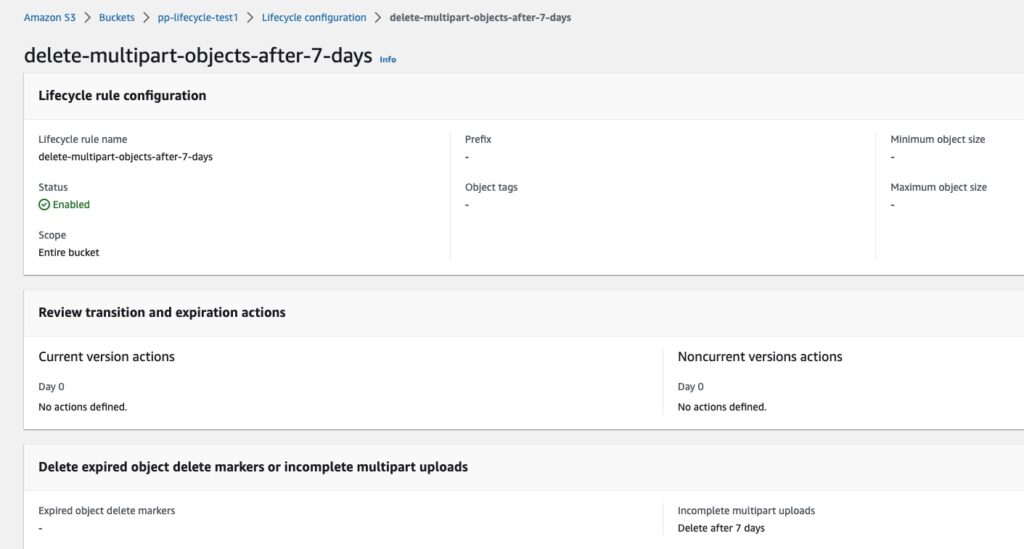

- Enter Lifecycle rule name – MPU Retention

- Select radio button “Apply to all objects in the bucket” and select the acknowledgement checkbox

- Under Lifecycle rule actions, select “Delete expired object delete markers or incomplete multipart uploads”

- Select the checkbox for “Delete incomplete multipart uploads”

- Enter 7 as “Number of days”

- Click Create rule

One Time Cleanup

In addition to implementing the lifecycle policy, perform a one-time cleanup using the script below:

Please note that the script below creates a file for each S3 bucket listing all existing multipart uploads, and then removes those uploads.

bucket_list=$(aws s3api list-buckets) |
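A comparable cleanup can be sketched in Python. The example below is illustrative rather than a port of the shell script: it assumes a boto3 S3 client is passed in, only touches uploads older than a configurable age so that uploads still in progress are left alone, and defaults to a dry run. The name `abort_incomplete_uploads` and its parameters are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any


def abort_incomplete_uploads(s3: Any, bucket: str, min_age_days: int = 7, dry_run: bool = True) -> int:
    """Abort multipart uploads in `bucket` initiated more than `min_age_days` ago.

    Returns the number of uploads that matched. With dry_run=True nothing is aborted.
    """
    cutoff = datetime.now(timezone.utc) - timedelta(days=min_age_days)
    matched = 0
    paginator = s3.get_paginator("list_multipart_uploads")
    for page in paginator.paginate(Bucket=bucket):
        for upload in page.get("Uploads", []):
            # boto3 returns "Initiated" as a timezone-aware datetime.
            if upload["Initiated"] > cutoff:
                continue  # too recent; may still be an active upload
            matched += 1
            if dry_run:
                print(f"would abort {bucket}/{upload['Key']} ({upload['UploadId']})")
            else:
                s3.abort_multipart_upload(
                    Bucket=bucket, Key=upload["Key"], UploadId=upload["UploadId"]
                )
    return matched
```

Running it once with the default `dry_run=True` shows what would be removed. Passing `dry_run=False` then performs the cleanup.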

Creating a lifecycle rule for new buckets

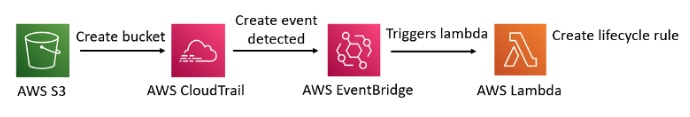

Within this approach, you will establish a lifecycle rule that is automatically applied whenever a new bucket is created.

This employs a simple Lambda function that is triggered each time a new bucket is created. The function applies a lifecycle rule that aborts incomplete multipart uploads 7 days after they were initiated.

Important to note: EventBridge rules are regional, so the rule and the Lambda function need to be deployed in each Region in which you operate.

- Enable an AWS CloudTrail trail. Once the trail is configured, you can use Amazon EventBridge to trigger a Lambda function.

- Use this CloudFormation template to create the resources required for the above workflow:

- Lambda function

- Lambda function IAM role

- EventBridge event triggers for every S3 bucket creation

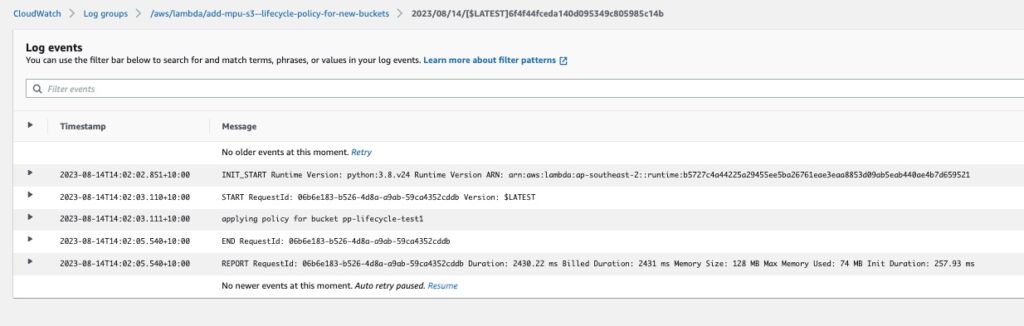

- Create a bucket to check that the Lambda function is working correctly, and check the CloudWatch logs

You’re all set! From now on, whenever you create a new bucket (within the Region you’ve set up), the Lambda function will automatically create a lifecycle rule for that bucket.
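For reference, a handler of the kind described in this section might look like the sketch below. It is not the function from the CloudFormation template. It assumes the EventBridge rule forwards CloudTrail `CreateBucket` events and that the bucket name is found under `detail.requestParameters.bucketName`. The rule ID and return values are illustrative.

```python
from typing import Optional


def bucket_from_event(event: dict) -> Optional[str]:
    """Extract the new bucket's name from a CloudTrail-via-EventBridge event, if any."""
    detail = event.get("detail") or {}
    if detail.get("eventName") != "CreateBucket":
        return None
    if detail.get("errorCode"):
        return None  # the CreateBucket call failed, so there is no bucket to configure
    return (detail.get("requestParameters") or {}).get("bucketName")


def lambda_handler(event, context):
    bucket = bucket_from_event(event)
    if bucket is None:
        return {"status": "ignored"}

    import boto3  # provided by the AWS Lambda Python runtime

    # A new bucket has no lifecycle configuration yet, so nothing is overwritten here.
    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration={
            "Rules": [
                {
                    "ID": "MPU Retention",  # illustrative rule ID
                    "Status": "Enabled",
                    "Filter": {"Prefix": ""},  # apply to the whole bucket
                    "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": 7},
                }
            ]
        },
    )
    return {"status": "applied", "bucket": bucket}
```

The function’s IAM role would need permission to put lifecycle configurations on buckets, and the handler’s output appears in the CloudWatch logs mentioned above.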

Conclusion

Amazon S3 multipart uploads are a powerful tool for efficiently managing large object uploads, but they also come with the potential for hidden costs. By understanding the factors that contribute to these costs and implementing the strategies outlined in this blog, you can significantly improve the management of S3 multipart uploads. As you navigate the cloud storage landscape, staying vigilant about hidden expenses will not only optimise your cost structure but also contribute to a more sustainable and streamlined cloud infrastructure.