Ever wondered how you can seamlessly compare data from a CSV file with information stored in a Postgres database?

What I would normally do is to load the data from the CSV file into a Pandas Data Frame and then query the database to get the data I need. However, I have a large amount of data in the database, and I do not want to load it all into memory.

Another way would be to load the CSV data into the database. Once it’s there it would not be too hard to compare the data. However, we have a SELECT-only permission on the database, and we are not allowed write access, even writing to temporary tables is not allowed. So, how can I compare the data in the CSV file with the data in the database without loading all the data into memory and without needing to write to the database?

Understanding the problem

Given the constraint of not being able to create temporary tables, you will need to import your CSV data dynamically within your SQL query. This can be achieved by constructing the CSV data as a set of values directly within the query.

For example:

I have a csv file which include details about a product:

- Product ID

- Product Name

- Price

- Quantity

- Stock

Sample CSV

https://gist.github.com/merchantmehul/39b6458a999e817118be95cd6cf9adaa

We could carry out this task using CTEs (Common Table Expressions) and some clever SQL tricks.

We will explore how to validate data in a CSV file against a Postgres database without needing to create any temporary tables.

Common Table Expressions

A Common Table Expression (CTE) is a temporary result set that you can reference within a SELECT, INSERT, UPDATE, or DELETE statement. CTEs are defined using the WITH keyword and offer an effortless way to organize complex queries. They are particularly useful for breaking down large queries into more manageable parts and improving readability.

Generating CTEs using Excel:

Initially I thought of creating this using Excel formulas which was quick, but the limitation is that every time we get a new CSV, we would have to recreate it for a new format.

For example,

Auto-generating CTE’s using Pandas:

Using PANDAS data frames, we can easily auto generate this CTE.

The steps to automate are

- Reading the csv file into a data frame

https://gist.github.com/merchantmehul/e0908a51d90d6beb48a866f434eb3348

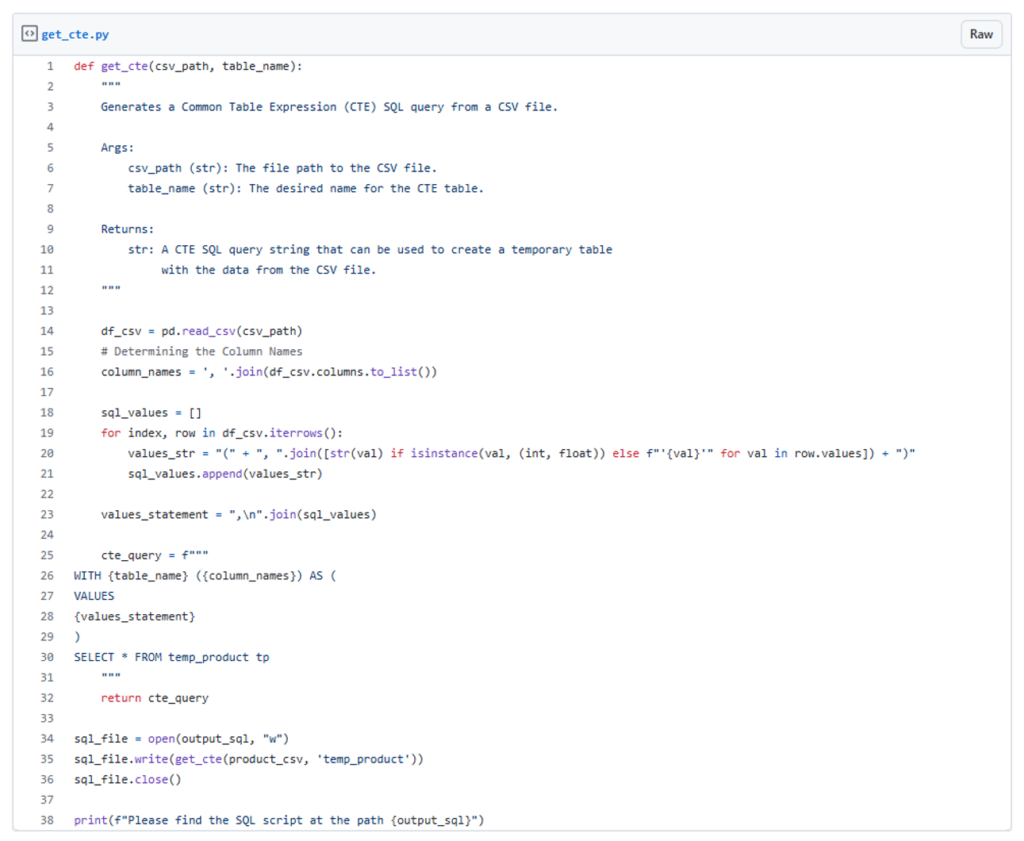

Function get_cte() the below

- Creating values statements

- Combine the statements with “WITH” clause to generate CTE.

https://gist.github.com/merchantmehul/092d82060100b08d2295ddc6d83e570b

The CTE generated by the get_cte function can then be used in your favourite SQL client to instantiate the CSV contents in SQL memory.

Validating the CSV Data against the PRODUCT table in DB

The generated query for the CTE dataset can be combined with any table in the database, allowing for versatile and comprehensive data analysis across various datasets.

The example below show that an exact match exists in the database; hence, it displays no results.

Conclusion

CTEs allow you to create temporary result sets within your SQL queries, making data comparisons straightforward and efficient – all without needing write permissions.

But the magic does not stop there. Enter Python and its powerful Pandas library. Pandas transforms data handling and manipulation into a breeze, allowing you to effortlessly load, process and convert CSV data into SQL-compatible formats. This is especially handy when preparing your data for validation against database records.

By harnessing the power of SQL’s CTEs and Pandas, you can turbocharge your data validation process. This dynamic duo ensures your data is correct and consistent, even with restricted database permissions. You are not just confirming data; you are using the strengths of both SQL and Python for a seamless, robust data analysis experience.

Here is a quick recap of the steps:

- Load and preprocess CSV data using Pandas, handling missing values, type conversions, and data cleaning with ease.

- Generate SQL-friendly formats from the processed CSV data, ready for seamless integration into your SQL queries.

- Use CTEs in SQL to create temporary views, simplifying the comparison of CSV data with database records.

- Execute and analyse the SQL queries to confirm your data.

By combining these powerful tools and techniques, you empower yourself to perform comprehensive data validation and analysis, ensuring the integrity and reliability of your datasets. Hope your data validation process gets a whole lot smarter and more efficient!