AWS re:Invent 2025 delivered one of the most transformative waves of innovation we’ve seen in years. These weren’t just incremental updates, but foundational leaps that reshape how we build, automate, and scale on the AWS cloud.

From agent-driven development and next-generation AI infrastructure to major breakthroughs in data, security and serverless, AWS has clearly shifted into a new era of builders’ tooling. Whether you’re leading platforms, shipping products, running ML workloads or modernising operations, this year’s announcements redefine what’s possible and what will soon become the new normal.

Below is a curated breakdown of the biggest releases you need to know and why they matter.

AI platforms, Agents & the Developer Experience

AWS leaned hard into an agent-first developer experience this year. Amazon Bedrock AgentCore was introduced as a fully managed runtime for production AI agents, alongside new “frontier agents” such as Kiro (virtual developer), plus AWS Security Agent and AWS DevOps Agent designed to work through common SDLC and operational tasks. To support custom agent development, AWS expanded Strands Agents with TypeScript, CDK integration and edge deployment, making it easier than ever to build reliable, domain-specific agents.

This matters because AgentCore and the frontier agents can take over much of the repetitive coding and ops work we normally burn hours on, so we can ship features faster and deliver improvements to customers in days instead of weeks.

Models & Customisation: Nova, Bedrock & SageMaker AI

AWS made model choice and customisation a lot more practical this year. The new Nova 2 model family and Nova Forge introduce “open training” style customisation using pre-trained checkpoints and curated datasets. Nova Act is specialised for UI and browser automation.

On top of that, Bedrock Reinforcement Fine-Tuning (RFT) enables alignment-style tuning for domain accuracy, while SageMaker AI now supports serverless customisation, cutting experimentation cycles from months to days.

The takeaway is simple: it’s now easier to tailor AI to real business context without a heavy ML ops lift, so customers can roll out smarter, more relevant experiences faster.

Trainium3 UltraServers: Next-Generation AWS AI Silicon

AWS unveiled Trainium3 UltraServers, a new 3nm AI accelerator platform enabling frontier-scale model training and high-throughput inference. With massive performance and efficiency improvements over Trainium2, these systems are positioned to power the next generation of cost-efficient LLM workloads both inside AWS and for early adopters like Anthropic.

This will:

- Lower the cost of training and running big models, making advanced AI use cases more commercially viable

- Deliver higher performance per watt, which helps at scale when workloads run continuously

- Leave more headroom for ambitious features, unlocking AI capabilities that were previously hard to justify on budget

New NVIDIA GB300 UltraServers on EC2

AWS introduced P6e-GB300 UltraServers, based on NVIDIA’s GB300 NVL72 architecture, enabling ultra-large model training and high-bandwidth reasoning at scale. These instances bring frontier GPU performance into the EC2 ecosystem with deep integration into Nitro, EKS, and AWS’s ML stack.

These servers are amazing as they will:

- Unlock extreme GPU scale on demand, without specialised hardware investment

- Accelerate training and high-throughput inference, especially for large reasoning workloads

- Simplify production deployment, thanks to tighter integration with EKS, networking and the broader AWS ML toolchain

AWS AI Factories for Sovereign & Regulated Workloads

AWS AI Factories allow organisations to deploy managed, hyperscale AI infrastructure inside their own data centers. These combine Trainium, NVIDIA GPUs, AWS networking, storage, databases, Bedrock, and SageMaker into a fully managed “private AWS region,” ideal for sovereign workloads and regulated industries.

AI Factories are a game-changer for our regulated customers as they will:

- Enable modern AI adoption while keeping data on-prem, supporting sovereignty and stricter regulatory needs

- Bring AWS-managed AI infrastructure behind the firewall, reducing operational burden for customers

- Accelerate time-to-value for regulated use cases, without compromising control, governance, or residency requirements

Amazon S3 Updates: Vectors, 50 TB Objects and Data Lake Enhancements

Amazon S3 received major AI-era upgrades: S3 Vectors (high-scale vector search built into S3), 50 TB object support for massive datasets, 10× faster S3 Batch Operations, and enhancements to S3 Tables for Apache Iceberg, such as Intelligent-Tiering and cross-account replication.

We love this, because S3 is basically becoming an AI-native database. This will:

- Make vector search more native and scalable, reducing the need to stitch together extra services

- Support much larger datasets more simply, especially for AI and analytics workloads

- Speed up large-scale data operations, improving throughput for batch processing and lakehouse management
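
For readers new to vector search, the core idea can be sketched in a few lines of plain Python. This is a conceptual illustration only, not the S3 Vectors API: the document keys, the three-dimensional toy embeddings and the `top_k` helper are all assumptions made up for this example, and a managed service would use approximate indexes rather than a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Brute-force search: score every stored vector, return the k closest."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]

# Hypothetical toy embeddings; real embeddings have hundreds of dimensions.
index = {
    "doc-invoice": [0.9, 0.1, 0.0],
    "doc-contract": [0.7, 0.3, 0.1],
    "doc-holiday-photo": [0.0, 0.2, 0.9],
}

results = top_k([1.0, 0.0, 0.0], index, k=2)
for key, score in results:
    print(f"{key}: {score:.3f}")
```

The appeal of having this built into the storage layer is that the vectors live next to the objects they describe, so there is one less system to keep in sync.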

Lambda Upgrades: Durable Functions and Managed Instances

AWS Lambda now supports durable functions, enabling long-running workflows that persist for up to a year. Lambda Managed Instances give developers EC2-level control (hardware, networking, pricing) with the ergonomics of Lambda, which is ideal for mission-critical pipelines and predictable workloads.

This will:

- Enable long-running, stateful workflows in serverless, without redesigning architectures

- Give better cost and performance control, while keeping the simplicity of Lambda

- Speed up delivery for event-driven and AI workflows, especially where orchestration and reliability matter most
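
To make "durable" concrete, here is a minimal sketch of the checkpoint-and-replay pattern that durable execution engines generally rely on. It is not the Lambda durable functions SDK: `CheckpointedRun`, `run_step` and the order workflow are hypothetical names invented for illustration, and a JSON string stands in for real durable storage.

```python
import json

class CheckpointedRun:
    """Records each completed step so a later re-run can skip it."""

    def __init__(self, checkpoints=None):
        self.checkpoints = dict(checkpoints or {})
        self.executed = []  # steps that actually ran in this attempt

    def run_step(self, name, fn):
        if name in self.checkpoints:
            # Already completed in an earlier attempt: replay the saved result.
            return self.checkpoints[name]
        result = fn()
        self.checkpoints[name] = result
        self.executed.append(name)
        return result

def order_workflow(run, fail_at_payment=False):
    order = run.run_step("reserve_stock", lambda: {"order_id": 42, "reserved": True})
    if fail_at_payment:
        raise RuntimeError("simulated crash before payment")
    payment = run.run_step(
        "charge_card", lambda: {"order_id": order["order_id"], "paid": True}
    )
    return run.run_step(
        "send_receipt", lambda: f"receipt for order {payment['order_id']}"
    )

# First attempt crashes after the first step has been checkpointed.
first = CheckpointedRun()
try:
    order_workflow(first, fail_at_payment=True)
except RuntimeError:
    pass
saved = json.dumps(first.checkpoints)  # stand-in for durable storage

# Second attempt resumes from the saved checkpoints.
second = CheckpointedRun(json.loads(saved))
result = order_workflow(second)
print(second.executed)
print(result)
```

On the second attempt only the payment and receipt steps execute; the stock reservation is replayed from its checkpoint, which is what lets a workflow survive restarts without repeating side effects.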

Networking & Multicloud: AWS Interconnect with Google Cloud

AWS Interconnect – multicloud, built in partnership with Google Cloud, delivers private, high-bandwidth links between clouds using an open spec and coordinated operations, pushing multicloud from DIY networking to a fully managed experience.

This will:

- Finally make multicloud sane, eliminating the historical networking pain between AWS and GCP

- Allow teams to combine services from both clouds without complex workarounds or fragile architectures

- Enable customers to adopt true best-of-both-worlds solutions that maximise performance, resilience and choice

Security Enhancements: GuardDuty ETD, Security Hub Analytics and IAM Policy Autopilot

GuardDuty now provides Extended Threat Detection for EC2 and ECS, correlating host- and container-level signals into actionable, MITRE-mapped findings. Security Hub adds real-time risk analytics across AWS security services. IAM Policy Autopilot, an open-source MCP server, generates least-privilege IAM policies directly from your code.

This will:

- Reduce noisy alerts and cut through security signal overload

- Automate least-privilege IAM, replacing manual policy tuning with intelligent defaults

- Strengthen overall security posture while significantly reducing operational burden

- Help teams avoid late-night fire drills by surfacing only the issues that actually matter
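
As a rough illustration of what "least privilege from your code" means, the sketch below turns a list of SDK calls into an IAM policy that allows only those actions on only those resources. It does not reproduce how IAM Policy Autopilot works internally: the `ACTION_MAP` table, the `build_policy` helper, the bucket, account ID and table name are all assumptions for this example. Only the policy document layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the standard IAM JSON format.

```python
import json

# Hypothetical mapping from SDK operations to IAM actions (illustration only).
ACTION_MAP = {
    ("s3", "get_object"): "s3:GetObject",
    ("s3", "put_object"): "s3:PutObject",
    ("dynamodb", "get_item"): "dynamodb:GetItem",
}

def build_policy(observed_calls):
    """Group the actions a codebase uses by resource and emit one statement each."""
    by_resource = {}
    for service, operation, resource_arn in observed_calls:
        action = ACTION_MAP[(service, operation)]
        by_resource.setdefault(resource_arn, set()).add(action)
    statements = [
        {"Effect": "Allow", "Action": sorted(actions), "Resource": arn}
        for arn, actions in sorted(by_resource.items())
    ]
    return {"Version": "2012-10-17", "Statement": statements}

# Calls assumed to have been found in the application code (made-up values).
observed_calls = [
    ("s3", "get_object", "arn:aws:s3:::example-bucket/*"),
    ("s3", "put_object", "arn:aws:s3:::example-bucket/*"),
    ("dynamodb", "get_item", "arn:aws:dynamodb:us-east-1:123456789012:table/ExampleTable"),
]

policy = build_policy(observed_calls)
print(json.dumps(policy, indent=2))
```

The point of the design is that nothing is granted by default: an action appears in the policy only if the code demonstrably uses it, which is the opposite of starting from a wildcard and trimming later.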

Customer Experience: Amazon Connect Agentic AI

Amazon Connect now includes agentic AI for both self-service and human-assisted workflows. With Nova-powered speech models, Connect can automate tasks, generate documentation, analyse sentiment, and assist human agents with context-aware recommendations.

This will:

- Deliver quicker, more natural support for customers through agentic AI

- Provide human agents with real-time guidance and next-best actions

- Reduce wait times and boost service quality immediately

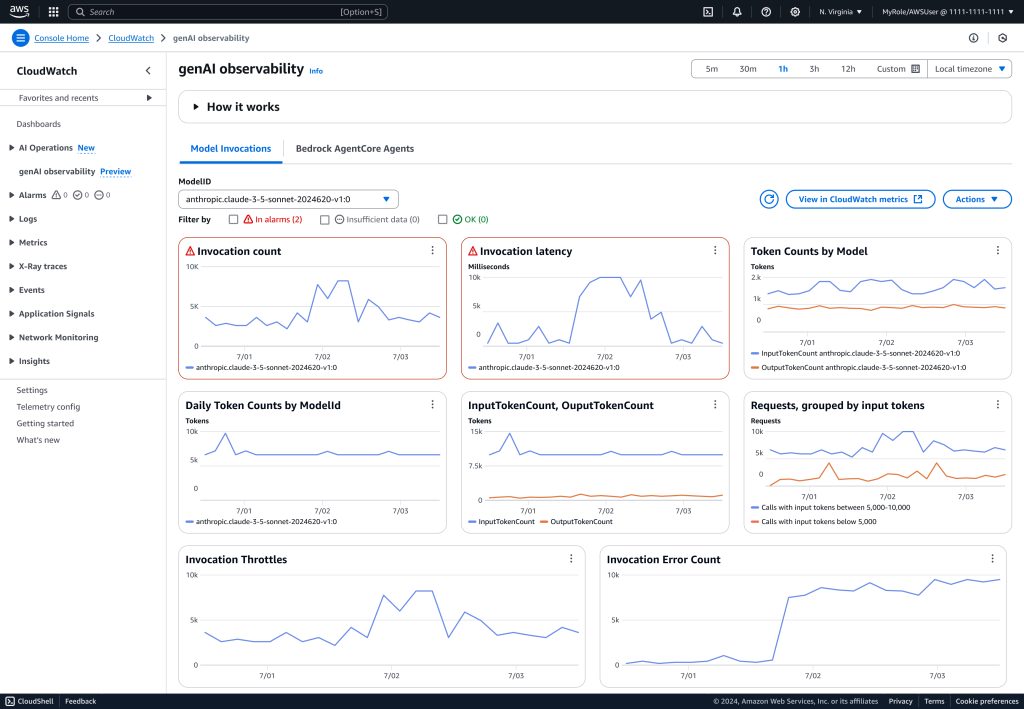

AI-Native Observability: CloudWatch and Related Services

CloudWatch gained AI-native observability for agents and LLMs, automatic application topology discovery, AI-generated incident reports, and expanded cross-account/Region telemetry via Logs Centralisation and Database Insights.

This will:

- Automate much of the detective work normally done by engineers

- Spot issues earlier, identify root causes and analyse incidents faster

- Improve system reliability and reduce time to recovery for customers

Data Collaboration & Core Services: Clean Rooms, EKS, Route 53 Global Resolver, Partner Central

AWS Clean Rooms introduced Clean Rooms ML synthetic data, a new capability that lets multiple parties collaborate on machine learning without sharing raw data. It generates noisy, de-identified synthetic datasets that preserve the statistical patterns of joint datasets (e.g., retailers + brands), enabling compliant ML training while reducing re-identification risk. Beyond data collaboration, AWS added new EKS capabilities for managed workload orchestration and cloud resource governance, launched Route 53 Global Resolver to unify DNS resolution across hybrid and multicloud environments, and integrated AWS Partner Central directly into the AWS Console for easier partner and marketplace management.

This will:

- Allow teams to collaborate on shared datasets without exposing sensitive information

- Enable new joint modelling and analytics opportunities with partners

- Unlock data-driven projects that were previously impossible due to privacy constraints
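
The idea of a noisy synthetic dataset that keeps the statistical shape of the original can be shown with a deliberately simple sketch. This is not how Clean Rooms ML generates synthetic data, and it offers no formal privacy guarantee: the `synthesize` helper, the `noise_scale` parameter and the basket values are assumptions for illustration, using a basic normal-distribution fit.

```python
import random
import statistics

def synthesize(values, n, noise_scale=0.1, seed=0):
    """Sample n synthetic values from a normal fit of the real data.

    The fitted mean is perturbed with a little noise, so no real record
    is copied and the summary statistics are only approximately kept.
    """
    rng = random.Random(seed)
    mean = statistics.mean(values)
    stdev = statistics.stdev(values)
    noisy_mean = mean + rng.gauss(0, noise_scale * stdev)
    return [rng.gauss(noisy_mean, stdev) for _ in range(n)]

# Hypothetical basket values from a joint retailer and brand dataset.
real = [120.0, 135.5, 98.2, 143.9, 110.4, 127.3, 101.8, 139.6]

synthetic = synthesize(real, n=1000)
print(f"real mean:      {statistics.mean(real):.1f}")
print(f"synthetic mean: {statistics.mean(synthetic):.1f}")
```

A model trained on the synthetic values sees roughly the same distribution as the real data, while the individual rows no longer correspond to any real customer.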

Updated AWS Support Experience

AWS reorganised support into Business Support+, Enterprise Support, and Unified Operations, with AI-powered assistance, faster response times, deeper security integration, and alignment with the new AI-driven operational tooling.

This will:

- Provide faster response-time SLAs across incidents and operational requests

- Use AI-powered troubleshooting to accelerate diagnostics and resolution

- Improve uptime and reduce delays, delivering a smoother customer experience

Conclusion

AWS re:Invent 2025 marks a turning point for builders on AWS, with breakthroughs across AI, compute, serverless, data, security, and operations that directly improve how teams design, deploy, and scale applications:

- AI becomes first-class with AgentCore, frontier agents, and expanded Bedrock/Nova models, giving developers production-ready AI building blocks instead of DIY glue.

- Model customisation gets easier thanks to Nova Forge, RFT, and SageMaker AI serverless customisation, making domain-specific AI accessible without ML ops complexity.

- Lambda steps up with durable functions and Managed Instances, enabling long-running AI workflows and cost-optimised compute without losing serverless ergonomics.

- Multicloud networking with AWS Interconnect makes cross-cloud architectures reliable, high-bandwidth, and finally simple.

- Security shifts left and gets smarter with GuardDuty ETD, Security Hub analytics, and IAM Policy Autopilot, reducing noise and tightening least-privilege by default.

- CloudWatch’s AI-first observability and improved support tiers give teams faster insights, better incident response, and more intelligent operations.

Across the board, these releases remove friction, automate complexity, and give builders more powerful primitives. Developing on AWS becomes faster, safer, more scalable, and far more AI-native than ever before, setting the stage for how modern applications will be built in the years ahead.

The AWS re:Invent 2025 announcements open the door to a new era of AI-native, high-performance cloud engineering. If you’re ready to explore how agentic AI, Trainium3, S3 Vectors, multicloud networking or the latest serverless and security capabilities can accelerate your delivery, Cevo can help.

Whether you’re scaling platforms, modernising workloads, or building out new AI-powered capabilities, our consultants partner with your team to design solutions that are robust, secure and future-ready.

Move faster with confidence. Reach out to Cevo to start the conversation.

Nicolas Foulon is a cloud consultant and senior developer specialising in solutions architecture, back-end systems, and modern cloud ecosystems (AWS and Azure). He excels at designing and delivering scalable, high-performance solutions that transform complex requirements into measurable business outcomes.